Amid the renewed and intensifying Israel-Hamas conflict, a controversial narrative has emerged alleging that artificial intelligence (AI) is being used to manipulate graphic images. The claim further complicates an already sensitive and contentious situation.

Sun Tzu's adage that “all warfare is based on deception” has taken on new dimensions, with allegations of misinformation, media manipulation, and AI-generated content aimed at swaying public opinion.

Over the years, the term “Pallywood” has come to represent a perceived propaganda war waged by some Palestinians against Israel, characterized by staged performances, media manipulation, and distortion.

Recent reports have sparked fresh controversy after graphic images of dead Israeli babies, including some described as decapitated or burned, entered the public narrative of the conflict.

These images, initially shared by Israeli Prime Minister Benjamin Netanyahu, raised concerns about their authenticity and origin.

Netanyahu's account posted the images on X, formerly Twitter, with a warning to viewers: “Here are some of the photos Prime Minister Benjamin Netanyahu showed to US Secretary of State Antony Blinken. Warning: These are horrifying photos of babies murdered and burned by the Hamas monsters.”

The disturbing photos displayed tiny, mangled bodies, including a blurred-out infant in a bloody onesie.

Netanyahu’s statement, “Hamas is inhuman. Hamas is ISIS,” added to the shock and outrage sparked by the images.

The controversy unfolded one day after Netanyahu had shared a picture of a child’s bloody bed, underscoring the grim nature of the conflict.

Israeli military officials alleged that Hamas terrorists had slaughtered at least 40 babies and young children, with reports suggesting that some were decapitated.

Major Nir Dinar, a spokesperson for the Israel Defense Forces, described the discovery of decapitated infant bodies at Kfar Aza. However, exact details regarding the number of affected infants and the extent of the decapitations remained unspecified.

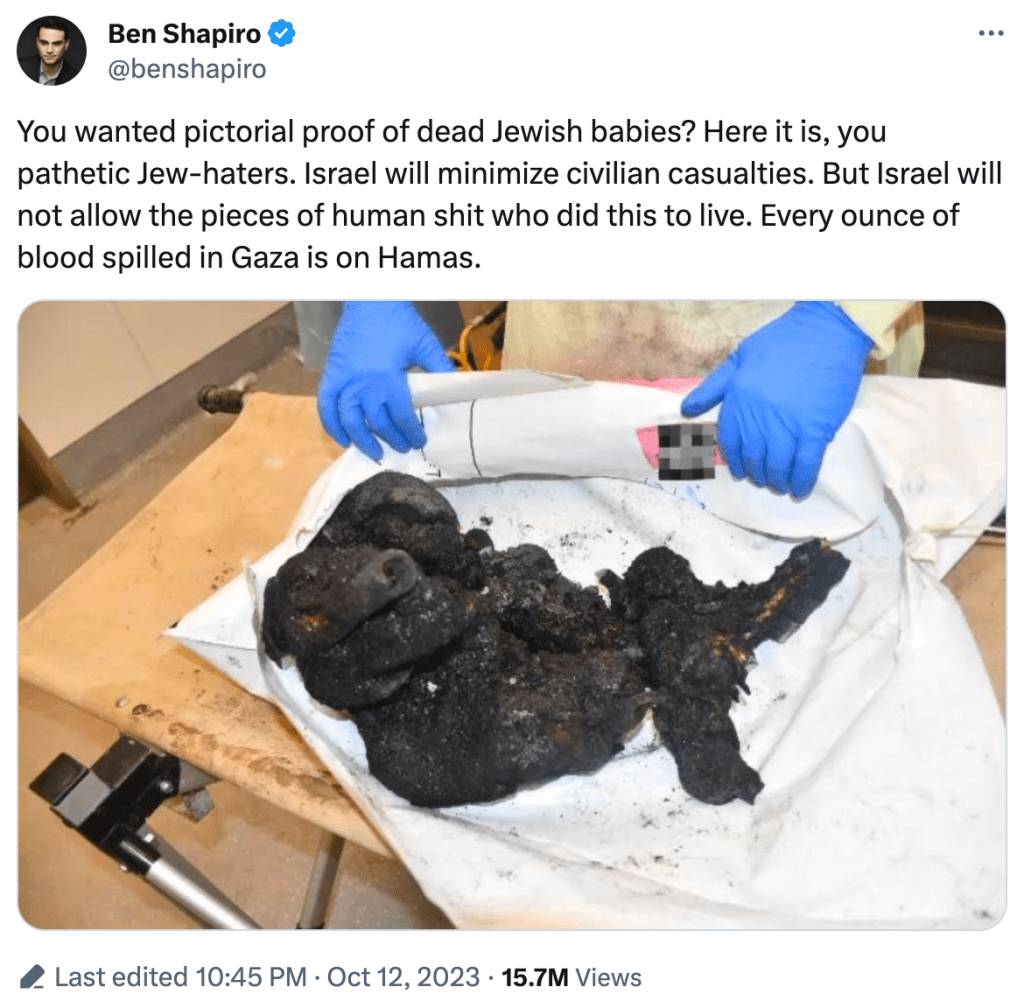

The shocking story of burned and decapitated babies quickly gained notoriety and was amplified by American Jewish conservative pundit Ben Shapiro, who shared one of the photos from Netanyahu’s post.

Shapiro's post had garnered more than 16 million views at the time of this report.

Shapiro’s post, featuring a photograph of a burned baby, included a vehement statement: “You wanted pictorial proof of dead Jewish babies? Here it is, you pathetic Jew-haters. Israel will minimize civilian casualties. But Israel will not allow the pieces of human excrement who did this to live. Every ounce of blood spilled in Gaza is on Hamas.”

The controversy deepened when some Hamas supporters and sympathizers claimed that the image of the burned baby shared by Shapiro and Netanyahu was AI-generated.

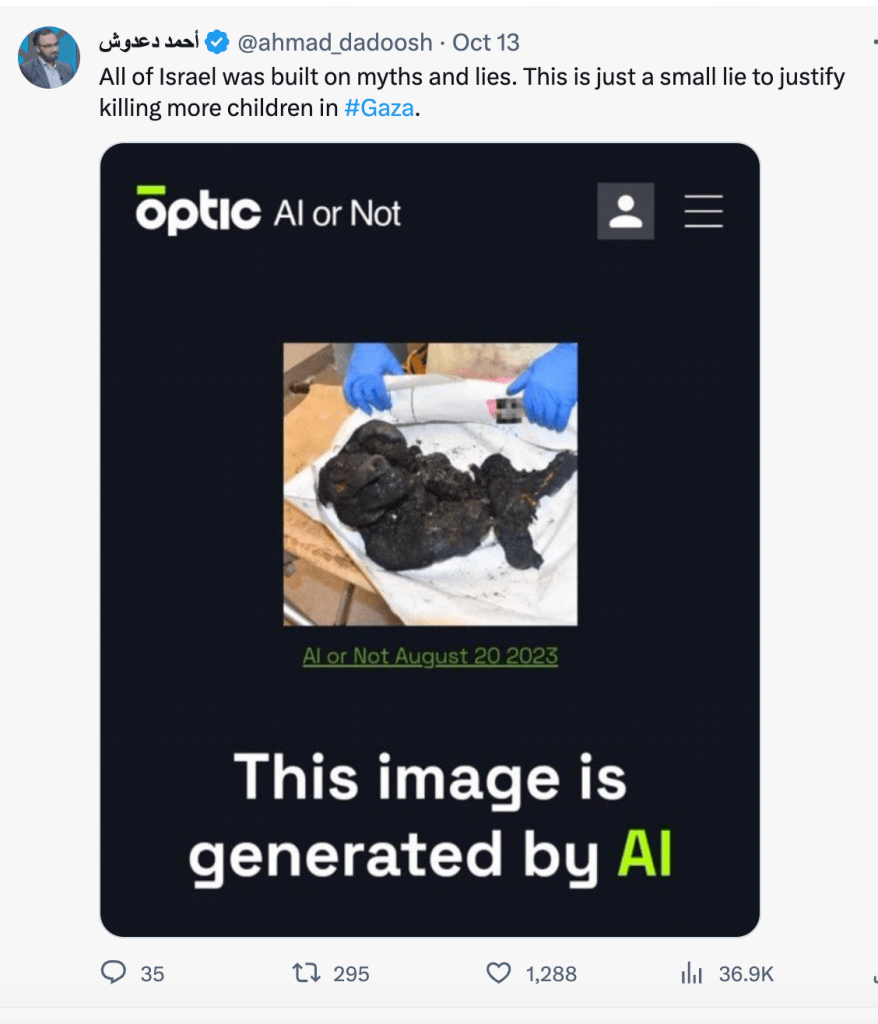

In response, a verified user named Hamad Daadoush, whose posts appear to be pro-Hamas and anti-Israel, dismissed the images as a form of deception.

He stated, “All of Israel was built on myths and lies. This is just a small lie to justify killing more children in #Gaza.”

The counter-propaganda campaign appeared to gain traction, even among those who sympathized with Israel, leading to doubts about the authenticity of the images.

Dom Lucre, a Donald Trump supporter with a substantial following, shared the following statement: “DEVELOPING: Ben Shapiro’s image of ‘dead Jewish babies’ is now being examined for possible AI alterations, resembling the dog on the right. It’s essential to mention that the Israeli Prime Minister, Benjamin Netanyahu, initially posted the image on the left, and Shapiro’s post was the one that the community took note of.”

Indeed, Shapiro’s widely circulated post received a Community Note stating that the photo was “AI-generated.”

However, the Community Note was later removed after AI experts joined the discussion in an effort to clarify the controversy.

Further complicating the matter, individuals with expertise in AI questioned the reliability of AI detection tools used to determine image authenticity.

A user named Dr. Vox Oculi, who claimed to be an “AI hobbyist,” highlighted the inherent unreliability of such tools, noting that their results vary and that post-production edits and poor image quality can produce misleading verdicts.

To support the argument that AI detectors can be unreliable, Dr. Vox Oculi pointed to the profile photo of conservative activist Laura Loomer, which a detector initially flagged as AI-generated. Further checks, however, produced inconsistent results, exposing the tool’s limitations.

Intriguingly, a similar image, substituting a puppy for the baby, emerged on 4chan shortly after the initial images were shared.

Dr. Oculi stated: “It’s easy to look at this and think, oh yes, it was actually a photo of a puppy rescue that was AI modified to look like a burnt baby, case closed. But this is where you’re wrong.”

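An analysis by The Arlington Post using Error Level Analysis (ELA) revealed distinct differences in compression artifacts between the two images. ELA re-saves a JPEG at a known compression level and highlights regions whose compression error differs from the rest of the picture, which can indicate that those regions were edited after the image was last saved.

While the image of the burned baby showed consistent compression patterns with no sign of editing, the puppy image exhibited signs of digital tampering.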

As this controversy unfolded, it became evident that AI’s role in generating and detecting images added a layer of complexity to an already challenging conflict, where the lines between fact and fiction have become increasingly blurred.

The claims that the images were AI-generated also had broader implications: they played into the hands of Hamas supporters and fed into criticism from some U.S. politicians, such as Rep. Alexandria Ocasio-Cortez, who condemned what she described as pro-Israeli “misinformation.”

After the Community Note disputing the authenticity of his post was removed, Shapiro called one of his critics a “liar.”

The critic, Jackson Hinkle, had previously mocked Shapiro’s post featuring a “burnt baby corpse” and had received a Community Note in response.

Community Notes, which had initially cast doubt on the authenticity of the images, now asserted that AI detection websites were known to be “VERY UNRELIABLE,” highlighting the ongoing challenge of distinguishing AI-generated content from real imagery.

Another user, who identified as a programmer and artist, also contested the reliability of AI detection tools, citing their poor track record and the ongoing debate within the artistic community over their accuracy.

As a final illustration of the challenges of AI detection, some users posted a screenshot of a detector’s verdict on Hinkle’s own profile photo, which rated it 73% artificial and 27% human, underlining how uncertain AI-based image analysis remains.